PEPR-IA Days in Paris-Saclay

PEPR IA Days Experience

Since I am currently a PhD student in the Apating Project, which is part of the France 2030 PEPR-IA (Priority Research Programme and Equipment on Artificial Intelligence), I had the chance to attend the PEPR IA Days, held from March 18 to 20, 2025, at CentraleSupélec in Saclay. The event was a great opportunity for the AI community to connect and exchange ideas. Many interesting topics and posters were presented, and I was particularly happy to see several AI project presentations.

Keynote Highlight

One of the highlights for me was the keynote talk “Sustainable AI – a key asset for European sovereign AI?” by Steven Latré, VP of R&D AI & Algorithms at IMEC and a professor at the University of Antwerp. He addressed a crucial question: what kind of AI should Europe aim to build, given the competition between China (e.g., DeepSeek) and the US (e.g., ChatGPT)? He made it clear that Europe will not follow China’s approach of using AI in ways that violate privacy and human rights, while the US appears to be moving forward with fewer regulatory constraints.

Steven Latré suggested that European AI is strong in three key areas: sustainability, scalability, and safety.

- Sustainability – Traditionally, the computational power (FLOPs) required for AI systems doubled roughly every year, and Moore’s Law allowed hardware to keep pace with this demand. Since the rise of deep learning around 2010, however, the demand for FLOPs has grown by a factor of 100 every year. Current GPU architectures are now hitting their limits, as the computing capacity per unit of chip area has remained essentially stable since the first GPUs were delivered.

  - The next major challenge in AI lies in the co-design of hardware and software.

  - Latré illustrated this with a comparison: the human brain can compute at exascale levels while consuming only 20 W, whereas modern exascale computers require 20 MW, making them one million times less energy-efficient than the human brain. Sarcastically, in my opinion, this raises the question: why not just use the brain instead?

  - The solution, he argued, lies in tightly integrated hardware and software development, as seen with DeepSeek.

- Scalability – Latré noted that AI models need to become larger and more complex to support advanced applications such as agentic AI, where multiple language models work together.
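Taking the keynote’s figures at face value, and assuming that “exascale” means $10^{18}$ operations per second for both the brain and the machine (my simplification), the energy-efficiency gap works out as:

```latex
\[
\frac{10^{18}\ \text{op/s}}{20\ \text{W}} = 5\times10^{16}\ \text{op/J},
\qquad
\frac{10^{18}\ \text{op/s}}{20\times10^{6}\ \text{W}} = 5\times10^{10}\ \text{op/J},
\qquad
\frac{5\times10^{16}}{5\times10^{10}} = 10^{6}.
\]
```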

Interesting Projects and Posters

Several projects and posters related to embedded AI caught my attention:

1. Flopoco Project

- Flopoco is an application-specific arithmetic hardware generator that produces VHDL code.

- It supports precisions from single to double, as well as fully custom formats defined by the number of exponent and mantissa bits.

- Flopoco can generate operators for single-variable functions such as exponentials, trigonometric functions, and logarithms.

- A particularly interesting feature is that Flopoco optimizes constant multipliers using an adder tree.

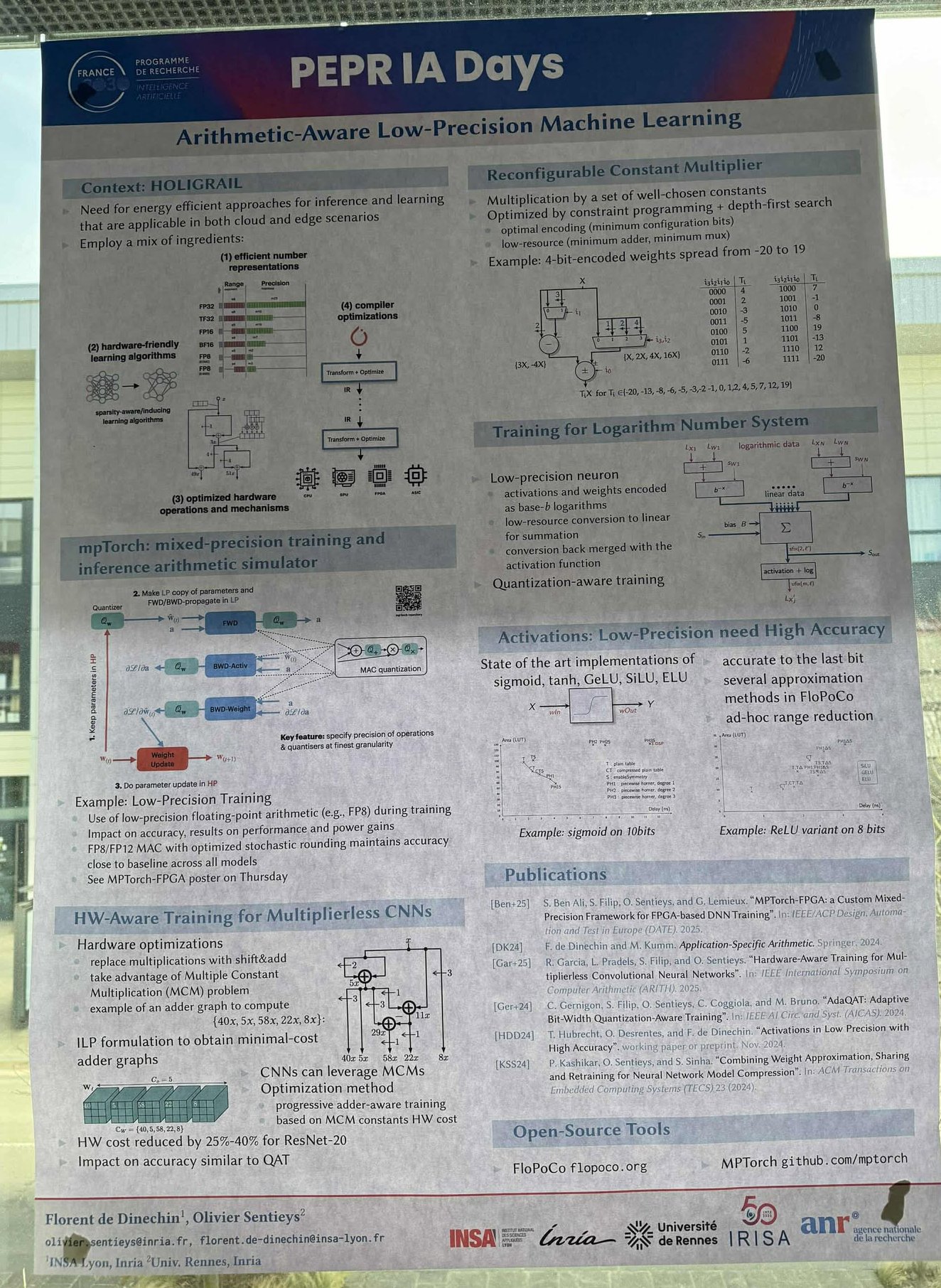
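To understand why constant multipliers can be built from adders, I wrote a minimal Python sketch. It uses canonical signed-digit (CSD) recoding, the simplest textbook way to turn a multiplication by a constant into a few shifts and additions/subtractions, and sums the terms pairwise like a balanced adder tree. Flopoco’s own constant-multiplier algorithms are more sophisticated; the function names `csd_digits`, `adder_tree_sum` and `mul_by_constant` are my own and are not part of Flopoco.

```python
def csd_digits(c):
    """Recode the integer c in canonical signed-digit (CSD) form.

    Returns (shift, sign) pairs with sign in {+1, -1} such that
    c == sum(sign * 2**shift); no two nonzero digits are adjacent.
    """
    digits = []
    shift = 0
    while c != 0:
        if c & 1:
            # Pick +1 if c % 4 == 1, or -1 if c % 4 == 3, so that the
            # remaining value is divisible by 4 (the next digit is zero).
            d = 2 - (c & 3)
            digits.append((shift, d))
            c -= d
        c >>= 1
        shift += 1
    return digits


def adder_tree_sum(terms):
    """Sum terms pairwise, level by level, like a balanced hardware adder tree."""
    level = list(terms)
    if not level:
        return 0
    while len(level) > 1:
        nxt = [level[i] + level[i + 1] for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:
            nxt.append(level[-1])  # odd term passes through to the next level
        level = nxt
    return level[0]


def mul_by_constant(x, c):
    """Multiply integer x by constant c using only shifts and adds/subtracts."""
    # sign * (...) is only a negation here, i.e. a subtractor in hardware.
    terms = [sign * (x << shift) for shift, sign in csd_digits(c)]
    return adder_tree_sum(terms)


if __name__ == "__main__":
    c = 255
    print(csd_digits(c))          # [(0, -1), (8, 1)], i.e. 255 = 2**8 - 1
    print(mul_by_constant(3, c))  # 765
```

Here 255 needs a single subtraction instead of the seven additions a plain binary decomposition would need, which is the kind of saving a constant-multiplier generator exploits.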

2. Arithmetic-Aware Low-Precision Machine Learning

- Developed by the same authors as Flopoco, this project explores the use of low-precision number representations for machine learning.

- Machine learning models are trained with low-precision formats like FP8 and evaluated for accuracy and power efficiency. Results show that FP8 and FP12, with optimized stochastic rounding, maintain accuracy close to baseline levels.

- The resulting low-precision weights are built directly into the hardware, which benefits from smaller constant multipliers.

- Reconfigurable constant multipliers are employed to multiply by any constant from a well-chosen set.

- High-accuracy activation functions are implemented using Flopoco.

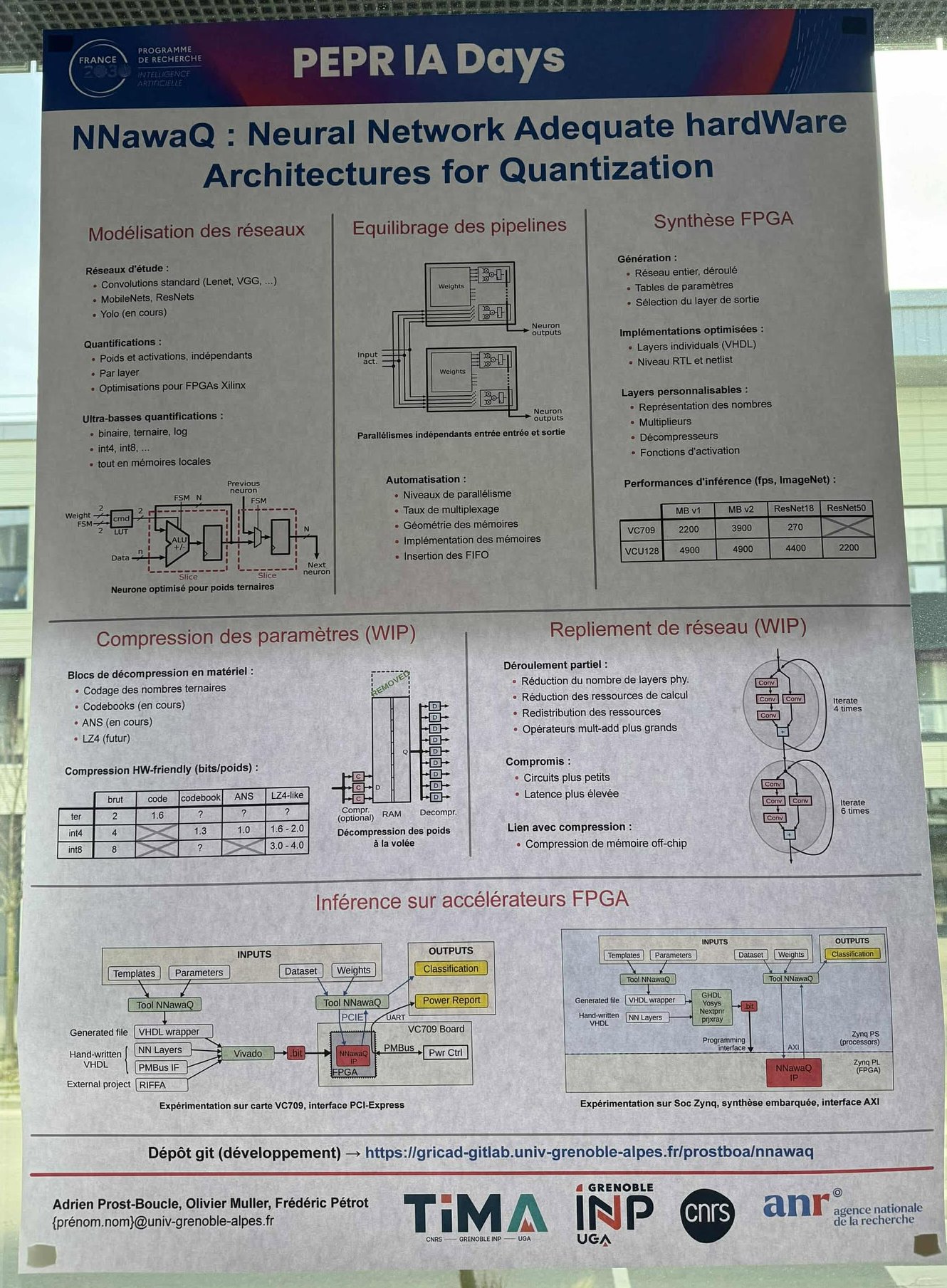
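To get a feel for why stochastic rounding matters in FP8/FP12 training, here is a small Python sketch. It rounds values to a toy IEEE-style float format with configurable exponent and mantissa widths, using either round-to-nearest or stochastic rounding, and then repeatedly adds a small step to a low-precision accumulator. The function names, the 5-exponent/6-mantissa “FP12” layout and the saturation behaviour are my own assumptions, not the formats or code from the poster.

```python
import math
import random


def quantize(x, exp_bits, man_bits, stochastic=False, rng=None):
    """Round x to a toy IEEE-style binary float with the given field widths.

    Assumptions: bias = 2**(exp_bits-1) - 1, subnormals supported,
    no inf/NaN encodings, and overflow saturates to the largest finite value.
    """
    if x == 0.0:
        return 0.0
    bias = 2 ** (exp_bits - 1) - 1
    emin, emax = 1 - bias, bias
    sign = -1.0 if x < 0 else 1.0
    a = abs(x)
    _, e = math.frexp(a)          # a = m * 2**e with 0.5 <= m < 1
    e = max(e - 1, emin)          # binade exponent, clamped for subnormals
    ulp = 2.0 ** (e - man_bits)   # spacing of representable values here
    scaled = a / ulp
    if stochastic:
        rng = rng or random
        low = math.floor(scaled)
        # Round up with probability equal to the distance from `low`,
        # so the rounding is unbiased on average.
        q = low + (1 if rng.random() < scaled - low else 0)
    else:
        q = round(scaled)         # round-to-nearest, ties to even
    max_val = (2.0 - 2.0 ** -man_bits) * 2.0 ** emax
    return sign * min(q * ulp, max_val)


def accumulate(step, n, exp_bits, man_bits, stochastic, seed=0):
    """Add `step` n times, rounding the running sum to the format after each add."""
    rng = random.Random(seed)
    acc = 0.0
    for _ in range(n):
        acc = quantize(acc + step, exp_bits, man_bits, stochastic, rng)
    return acc


if __name__ == "__main__":
    # Hypothetical 12-bit layout: 1 sign + 5 exponent + 6 mantissa bits.
    rn = accumulate(0.001, 1000, 5, 6, stochastic=False)
    sr = accumulate(0.001, 1000, 5, 6, stochastic=True)
    print(f"exact ~1.0, round-to-nearest {rn}, stochastic {sr}")
```

With round-to-nearest, once the sum is large enough that the step is less than half the spacing between representable values, every addition is rounded away and the sum stalls far below 1.0. Stochastic rounding keeps the sum close to 1.0 because each individual rounding is unbiased.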

3. NNawaQ

- NNawaQ focuses on quantized convolutional neural network (CNN) inference on FPGAs.

- It supports customizable quantization bit widths (binary, ternary, or INT4).

- It employs pipelining and parallelism between input and output layers.

- The weights are stored in a FIFO, and NNawaQ introduces LZ4-based weight compression with hardware decompression to reduce the memory footprint.

- Experiments were conducted on VC709 and Zynq SoC platforms.

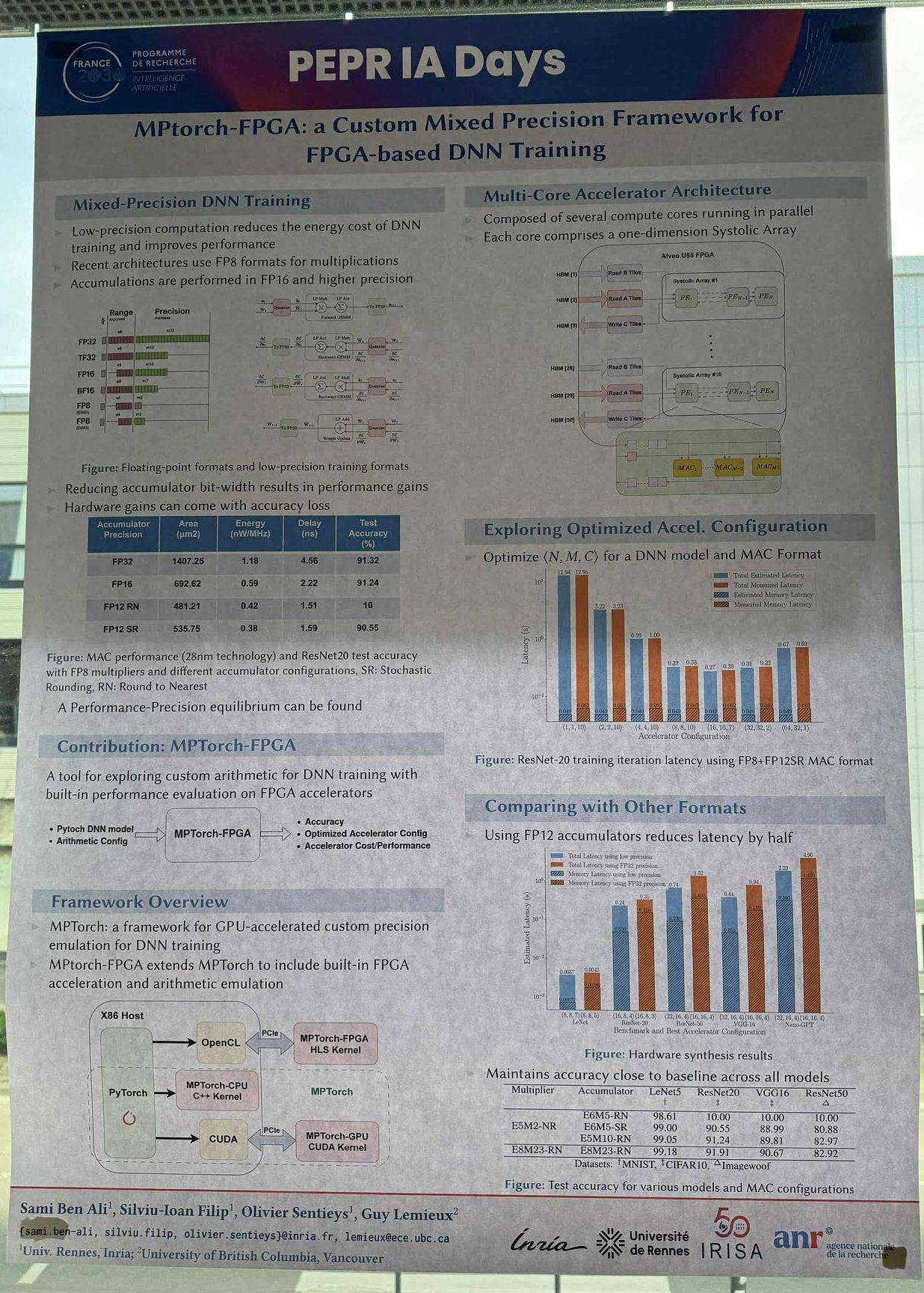
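As a rough illustration of how much memory low-bit weights save, the Python sketch below ternarizes a weight tensor and packs four 2-bit codes per byte. The threshold heuristic (0.7 times the mean absolute weight, borrowed from Ternary Weight Networks) and the 2-bit packing layout are my assumptions; I did not note NNawaQ’s actual quantizer or storage format, and its LZ4 stage is not reproduced here.

```python
import numpy as np


def ternarize(w, delta_scale=0.7):
    """Map weights to {-1, 0, +1} plus one scale factor alpha (TWN-style heuristic)."""
    delta = delta_scale * np.mean(np.abs(w))
    t = np.zeros(w.shape, dtype=np.int8)
    t[w > delta] = 1
    t[w < -delta] = -1
    mask = t != 0
    # alpha * t approximates w; alpha is the mean magnitude of the kept weights.
    alpha = float(np.mean(np.abs(w[mask]))) if mask.any() else 0.0
    return t, alpha


def pack_ternary(t):
    """Pack ternary values into bytes, 2 bits each (codes: 0 -> 0, +1 -> 1, -1 -> 2)."""
    codes = np.where(t < 0, 2, t).astype(np.uint8).ravel()
    pad = (-len(codes)) % 4  # pad to a multiple of 4 codes
    codes = np.concatenate([codes, np.zeros(pad, dtype=np.uint8)]).reshape(-1, 4)
    packed = codes[:, 0] | (codes[:, 1] << 2) | (codes[:, 2] << 4) | (codes[:, 3] << 6)
    return packed.astype(np.uint8)


def unpack_ternary(packed, n):
    """Inverse of pack_ternary: recover the first n ternary values."""
    codes = np.stack([(packed >> s) & 0b11 for s in (0, 2, 4, 6)], axis=1).ravel()[:n]
    codes = codes.astype(np.int8)
    return np.where(codes == 2, -1, codes).astype(np.int8)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    w = rng.standard_normal(1001).astype(np.float32)
    t, alpha = ternarize(w)
    packed = pack_ternary(t)
    print(f"FP32: {w.nbytes} bytes, packed ternary: {packed.nbytes} bytes, alpha={alpha:.3f}")
```

On 1001 weights, the packed array takes 251 bytes instead of 4004 bytes in FP32; a general-purpose compressor such as LZ4 would then work on top of this.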

4. MPTorch

- MPTorch is designed for mixed-precision deep neural network (DNN) training.

- The key idea is that lower precision reduces both energy consumption and computation time.

- Recent architectures use FP8 for multiplication and FP16 for accumulation.

- The results showed only a 0.8% accuracy drop between FP32 and FP12 (with stochastic rounding), while achieving a 3x improvement in area, energy, and delay.

- The framework integrates PyTorch with OpenCL and CUDA for FPGA/CPU/GPU execution.

- On FPGAs, the architecture is based on a systolic array of processing elements (PEs).
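The idea of multiplying in a narrow format but accumulating in a wider one can be illustrated with a short NumPy sketch. NumPy has no FP8 type, so float16 stands in for the multiplication format, and the accumulator is either float16 or float32. `dot_mixed` is a hypothetical helper written for this post, not MPTorch’s API.

```python
import numpy as np


def dot_mixed(a, b, mul_dtype, acc_dtype):
    """Dot product with products rounded to mul_dtype and the sum kept in acc_dtype."""
    a_lo = a.astype(mul_dtype)
    b_lo = b.astype(mul_dtype)
    acc = acc_dtype(0)
    for x, y in zip(a_lo, b_lo):
        prod = mul_dtype(x * y)                  # product rounded to the multiply format
        acc = acc_dtype(acc + acc_dtype(prod))   # running sum rounded to the accumulate format
    return float(acc)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    a, b = rng.random(4096), rng.random(4096)
    # Reference: exact (float64) dot product of the float16-rounded inputs.
    ref = float(np.dot(a.astype(np.float16).astype(np.float64),
                       b.astype(np.float16).astype(np.float64)))
    for acc in (np.float16, np.float32):
        err = abs(dot_mixed(a, b, np.float16, acc) - ref)
        print(f"accumulate in {acc.__name__}: abs error {err:.4f}")
```

With float16 accumulation, the running sum soon becomes large enough that many small products are partly or entirely rounded away, so the error is far larger than with float32 accumulation, even though the products themselves are identical in both runs.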

5. Greedy Training for Neural Networks

- This project uses Physics-Informed Neural Networks (PINNs) to solve partial differential equations (PDEs).

- The key advantage is that it avoids overfitting by focusing only on parts of the solution where the error is highest.
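I did not note the exact greedy procedure from the poster, so the Python sketch below illustrates only the general idea of focusing on the regions where the error is highest. It uses residual-based point selection, a common PINN technique that I swapped in for illustration: evaluate the PDE residual of the current approximation at candidate points and keep the worst ones for the next training round. The toy problem (u'' = f with exact solution sin(πx)), the finite-difference second derivative and the hand-made `u_hat` standing in for a partially trained network are all my assumptions.

```python
import numpy as np


def pde_residual(u, f, x, h=1e-3):
    """Residual u''(x) - f(x) for the 1D Poisson problem u'' = f.

    u'' is approximated with central finite differences here; an actual
    PINN would differentiate the network with automatic differentiation.
    """
    u_xx = (u(x + h) - 2.0 * u(x) + u(x - h)) / h**2
    return u_xx - f(x)


def select_high_error_points(u, f, candidates, k):
    """Return the k candidate points where |residual| is largest."""
    r = np.abs(pde_residual(u, f, candidates))
    return candidates[np.argsort(r)[-k:]]


# Toy problem (assumption): exact solution sin(pi x), so f = -pi^2 sin(pi x).
def f(x):
    return -np.pi**2 * np.sin(np.pi * x)


# Stand-in for a partially trained network: exact solution plus a local
# error bump centred at x = 0.7.
def u_hat(x):
    return np.sin(np.pi * x) + 0.05 * np.exp(-((x - 0.7) / 0.05) ** 2)


if __name__ == "__main__":
    xs = np.linspace(0.0, 1.0, 201)
    new_points = select_high_error_points(u_hat, f, xs, k=10)
    print(np.sort(new_points))  # clustered around the error bump at x = 0.7
```

The selected points all fall near the region where the approximation is wrong, so further training effort goes there instead of into parts of the domain that are already accurate.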

6. Butterfly Factorization

- Butterfly Factorization is inspired by the Fast Fourier Transform (FFT): it expresses a dense linear transform as a product of sparse factors, hierarchically and recursively reducing the computation with the FFT’s butterfly pattern.

- This approach reduces memory usage, accelerates computation, and improves generalization.
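The butterfly pattern can be made concrete with a short NumPy sketch. A butterfly matrix is applied as log2(n) stages, each mixing pairs of entries with one 2×2 block per pair; with the FFT’s twiddle factors as blocks (and a bit-reversed input), the product is exactly the discrete Fourier transform. In a learned butterfly layer the 2×2 blocks would instead be trainable parameters. The parameterization and all function names here are my assumptions; the factorization presented at the poster may differ in its details.

```python
import numpy as np


def bit_reverse_permutation(n):
    """Indices in bit-reversed order for a power-of-two n."""
    bits = n.bit_length() - 1
    return np.array([int(format(i, f"0{bits}b")[::-1], 2) for i in range(n)])


def butterfly_apply(x, blocks):
    """Apply a product of butterfly factors; blocks[s] has shape (n // 2, 2, 2)."""
    y = np.asarray(x, dtype=complex).copy()
    n = y.shape[0]
    h = 1  # distance between the two entries of a pair at this stage
    for stage in blocks:
        p = 0
        for k in range(0, n, 2 * h):
            for j in range(h):
                a, b = y[k + j], y[k + j + h]
                blk = stage[p]
                y[k + j] = blk[0, 0] * a + blk[0, 1] * b
                y[k + j + h] = blk[1, 0] * a + blk[1, 1] * b
                p += 1
        h *= 2
    return y


def fft_blocks(n):
    """2x2 blocks that turn the butterfly product into the radix-2 DFT."""
    blocks, h = [], 1
    while h < n:
        stage = np.zeros((n // 2, 2, 2), dtype=complex)
        p = 0
        for k in range(0, n, 2 * h):
            for j in range(h):
                w = np.exp(-2j * np.pi * j / (2 * h))  # twiddle factor
                stage[p] = [[1, w], [1, -w]]
                p += 1
        blocks.append(stage)
        h *= 2
    return blocks


def butterfly_param_count(n):
    """log2(n) stages x n/2 blocks x 4 entries = 2 n log2(n)."""
    return 4 * (n // 2) * (n.bit_length() - 1)


if __name__ == "__main__":
    x = np.random.default_rng(0).standard_normal(16)
    perm = bit_reverse_permutation(16)
    print(np.allclose(butterfly_apply(x[perm], fft_blocks(16)), np.fft.fft(x)))
    print(butterfly_param_count(1024), "parameters vs", 1024 * 1024, "for a dense matrix")
```

For n = 1024, the factors hold 2 × 1024 × 10 = 20,480 parameters versus 1,048,576 for a dense matrix, and applying them costs O(n log n) operations instead of O(n²).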

Summary

Attending the PEPR IA Days was an insightful experience. It provided me with a broader perspective on the challenges and opportunities in AI, particularly in sustainable and scalable AI. The keynote by Steven Latré and the various projects on embedded AI reinforced the importance of co-designing hardware and software to address the growing computational demands of AI.
